Generative AI has made convincing deepfakes cheaper than a cup of coffee. Organisations such as Samsung, IBM and Booz Allen are now investing in startups that can help detect them.

“There are two kinds of clients,” says Ben Colman, CEO of deepfake cybersecurity startup Reality Defender.

“One of them comes in and says we need this because we know the problem is getting worse. The other says it’s not an issue, and then when it is, they call us at four in the morning.”

The post-ChatGPT advent of generative AI dramatically lowered the technical barriers to creating deepfakes – real-looking video and audio content, even in real time, that is, in fact, completely fake. For less than the price of a coffee, someone can make a fake piece of media more convincing than anything people would have seen just a couple of years ago. All you need to make a fake video is a still photo, and all you need for a high-fidelity voice clone is a few seconds of recording.

Gen AI-enabled fraud could lead to losses of up to $40bn by 2027 in the US alone, more than triple the level of 2023, according to Deloitte. In one high-profile incident, engineering firm Arup lost $25m when a Hong Kong-based staff member thought they were on a video conference with the company’s Britain-based CFO, who instructed them to transfer the money.

Incidents that make the news are just the tip of the iceberg. Too many organisations are yet to reckon with the scope of the problem, and even many of those who have been affected by it are not taking appropriate action.

“All the stuff that’s really happening, you don’t hear about, because the folks that have been defrauded don’t want to admit it for service-level agreement or insurance reasons, but it’s unfortunately happening every single day,” says Colman. “If a company claims they haven’t had it yet, they either are lying or they just don’t know that it’s already happened.”

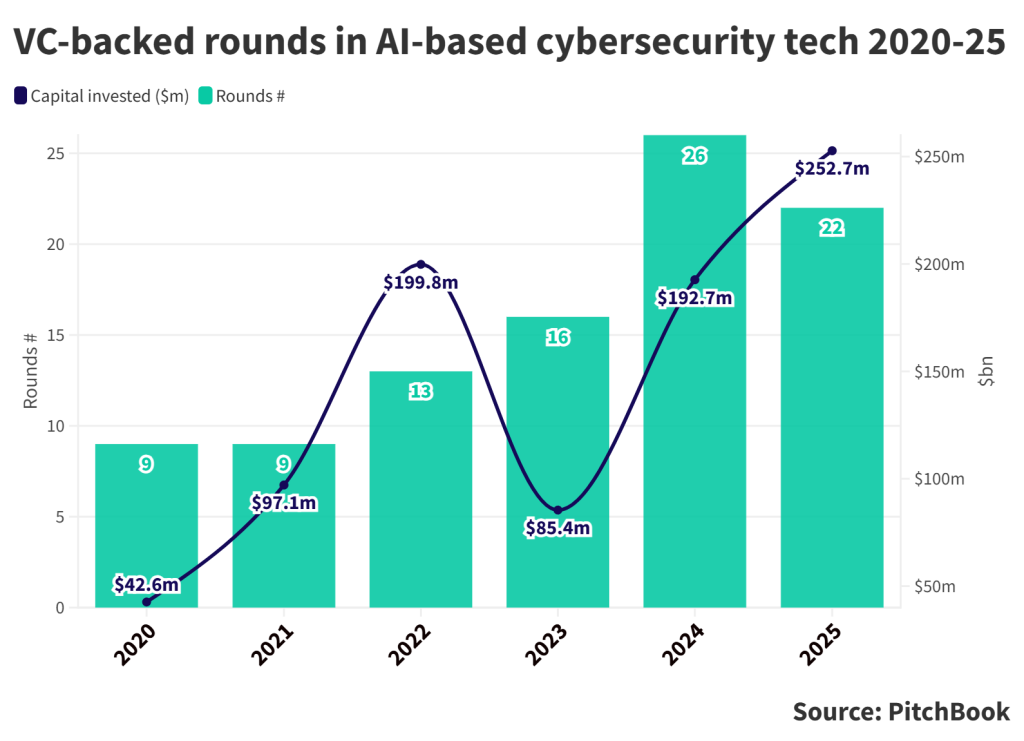

Investors are taking note, too. While data on deepfake and gen AI-specific rounds is hard to come by, it is clear that despite taking a sharp dip in dollar value between 2022 and 2023 – correlating with the venture downturn – VC-backed rounds for AI-based cybersecurity technology more generally have been rising rapidly both in terms of deal flow and capital invested. This year is already on track to eclipse the number of deals from 2024, and the investment volume has already dwarfed last year’s.

Reality Defender raised a $33m series A last year from corporate investors like IBM Ventures, Booz Allen Ventures, Samsung NEXT and Accenture.

Other recent corporate-backed rounds include those for GetReal Security, which provides an authentication platform against gen AI threats, this past March; disinformation and deepfake detection technology developer Alethea last year; and multi-channel deepfake simulation training company Adaptive AI, which earlier this month raised $12m from OpenAI. Corporates that have recently backed startups in this space include Cisco, Capital One and Alphabet, among others.

Sophistication among thieves

“Deepfake-enabled fraud is on steroids at the moment. Every other person you meet seems to have encountered it in some way,” says Aarti Samani, CEO of deepfake fraud resilience consultancy Shreem Growth Partners.

These are not just solo gambits. Fraud-as-a-service is a real thing with elaborate infrastructure built around it. Making the deepfake itself is the easy part; the real work is in creating the narrative that makes that deepfake believable in context. The groups that carry out these acts have enterprise-like structures to make that happen, complete with researchers, psychologists, technologists and employees.

What organisations are seeing more of now are gambits like fake worker fraud, where candidates for a job make it through a multi-round hiring process while being entirely fake, albeit perhaps with real documentation that malicious actors buy from real people. LinkedIn profiles, CVs and videocall avatars are all generated by AI. Once inside, they can wreak havoc by exfiltrating sensitive employee and customer data.

“This is a massive problem in the US right now, there are over 300 companies who are employing fake workers [who may have duped their way in using AI and deepfake technology] and they don’t even know about it. And now they’re in the UK and Europe, where there is not enough awareness about it,” says Samani.

Companies tend to have strong know-your-customer processes, but weaker know-your-employee processes.

“We are validating an individual who may transact with us once or twice a year, but we are not validating an individual whom we are bringing into our environment and handing over credentials to give access to various sensitive areas in our organisation.”

The same exists on the other side, where a fake employer can put real candidates through their paces, “hire” them, and leave them in the lurch while taking all their information for nefarious purposes.

Investment opportunities

Today, many fake communications are entirely synthetic: they are built from the ground up using synthetic voices or images, and are therefore easier to detect.

However, one of the biggest upcoming challenges, says Colman, will be in combating things like hybrid content that is only partially altered by AI, or that has multiple layers of AI enhancement, or that has gone through multiple different formats, making it more difficult to peel back the layers.

There is also a lot of untapped investment opportunity in red teaming – simulating attacks so organisations can find weak spots.

Demonstrating a track record with clients in high-risk sectors like finance is also attractive to investors. When explaining the reasoning for its investment in Reality Defender, Samsung NEXT investor Carlos Castellanos, who oversaw the CVC’s participation in the series A, noted the startup’s reputation in hard-hit sectors.

“The company’s sophisticated approach has gained significant traction in the financial sector, with major U.S. banks implementing the technology for voice fraud detection in call centres, video verification during customer onboarding, and executive impersonation monitoring to safeguard brand reputation,” said Castellanos.

People are the weak link

“It is harder for the perpetrators to break into security technology, but the easier way is to break into people, because people haven’t been hardened yet,” says Samani.

Plenty of companies have done rudimentary training on spotting phishing emails and the like, but spotting fake voices on the phone or on videoconferences is a different game, not least because a critical mass of executives still think the problem is years away, not a real and present danger.

If you think of a typical AI face overlaid onto a real person’s during a video call, you might think that the mouth wouldn’t move properly and would be stiff in places such that it would be easy to recognise. In reality, especially when you have other effects like a blurred background, small glitches easily fade into the background and evade the suspicion of many.

For organisations, one of the main problems is figuring out where the budget sits for countering fraud. At too many organisations, says Samani, it sits in a no man’s land because they have a hard time defining what kind of problem it is.

Is it a cybersecurity issue? A recruitment issue? A risk and compliance issue? Depending on the answer, teams are happy to not take ownership of it.

“Typically, I get told by the chief information security officer that they already have a training in place for phishing emails, et cetera. HR don’t really think security should be part of their budget, even though recruitment fraud is a big problem. Marketing folks think it’s not part of their budget, even though their website is being cloned and their people are being cloned,” says Samani.

“In reality, it’s all of the above. There is no black and white.”

Playing catchup

“The best users of technology, unfortunately, are fraudsters. They’re competing under a single KPI, which is just the dollar value of fraud,” says Colman.

On the dark side of the ledger, innovation is moving fast, both in terms of technology and business model. The people defending against fraud are fighting an uphill battle, playing catchup against an ever-evolving enemy.

“The last stat I read was that there is a five-year difference between the attacker and the defender,” says Samani.

This is especially true for audio, which has fewer markers for detection than video. Long delays in conversation with a bot are a thing of the past, and adding something as simple as ambient sound to an audio deepfake makes it appear more realistic to detection technology.

“So much of the world’s decisions, financial and otherwise, are being made over audio channels. Audio hasn’t really changed a lot – video has – yet it’s this kind of boomerang back to more traditional audio-based confidence scams,” says Colman.

What makes audio such an effective attack surface is not so much the difficulty in combating it, but rather its accessibility. It’s something you can do cheaply, directly on your phone, and deploy at scale through channels that people already trust for major transactions, without the cloud compute resources that video might need.

Additionally, the time delay of 4 to 7 seconds that older speech generation tools had between input and output has been virtually eliminated by LLMs, making it much harder for someone on the phone to figure out they’re talking to a fake person.

Some of the most worrying use cases for this kind of deception are larger than the finances of a single company. Images of a fake explosion at the Pentagon in Washington D.C. in early 2023 set off a ten-figure stock market tumble which, despite its quick recovery, highlights how cheaply produced fake media – spread across algorithmically-driven social media platforms that are themselves replete with bad or credulous actors – can move entire economies.

Fernando Moncada Rivera

Fernando Moncada Rivera is a reporter at Global Corporate Venturing and also host of the CVC Unplugged podcast.